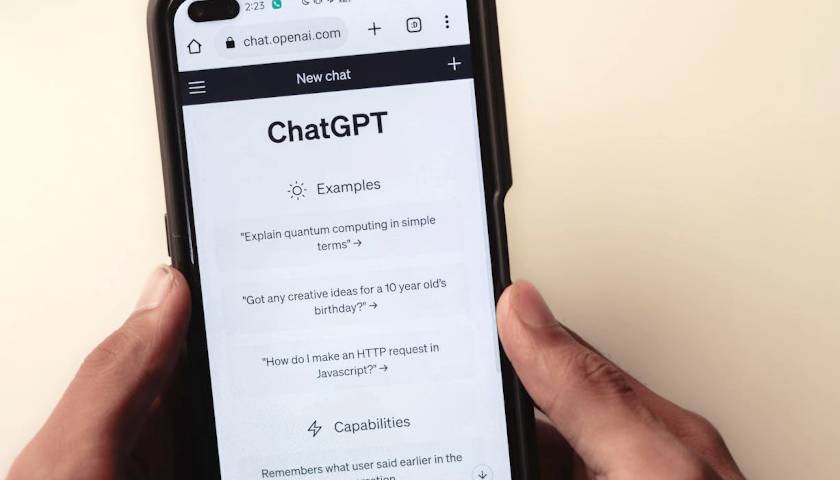

AI-powered image generators were back in the news earlier this year, this time for their propensity to create historically inaccurate and ethically questionable imagery. These recent missteps reinforced that, far from being the independent thinking machines of science fiction, AI models merely mimic what they’ve seen on the web, and the heavy hand of their creators artificially steers them toward certain kinds of representations. What can we learn from how OpenAI’s image generator created a series of images about Democratic and Republican causes and voters last December?

OpenAI’s ChatGPT 4 service, with its built-in image generator DALL-E, was asked to create an image representing the Democratic Party (shown below). When asked to explain the image and its details, ChatGPT said that the scene is set in a “bustling urban environment [that] symbolizes progress and innovation . . . cities are often seen as hubs of cultural diversity and technological advancement, aligning with the Democratic Party’s focus on forward-thinking policies and modernization.” The image, ChatGPT continued, “features a diverse group of individuals of various ages, ethnicities, and genders. This diversity represents inclusivity and unity, key values of the Democratic Party.” It also cited the themes of “social justice, civil rights, and addressing climate change.”
